Stop Playing the Lottery with Your Ads: A/B Testing for Meteoric Conversion Rates!

Introduction

What is A/B Testing?

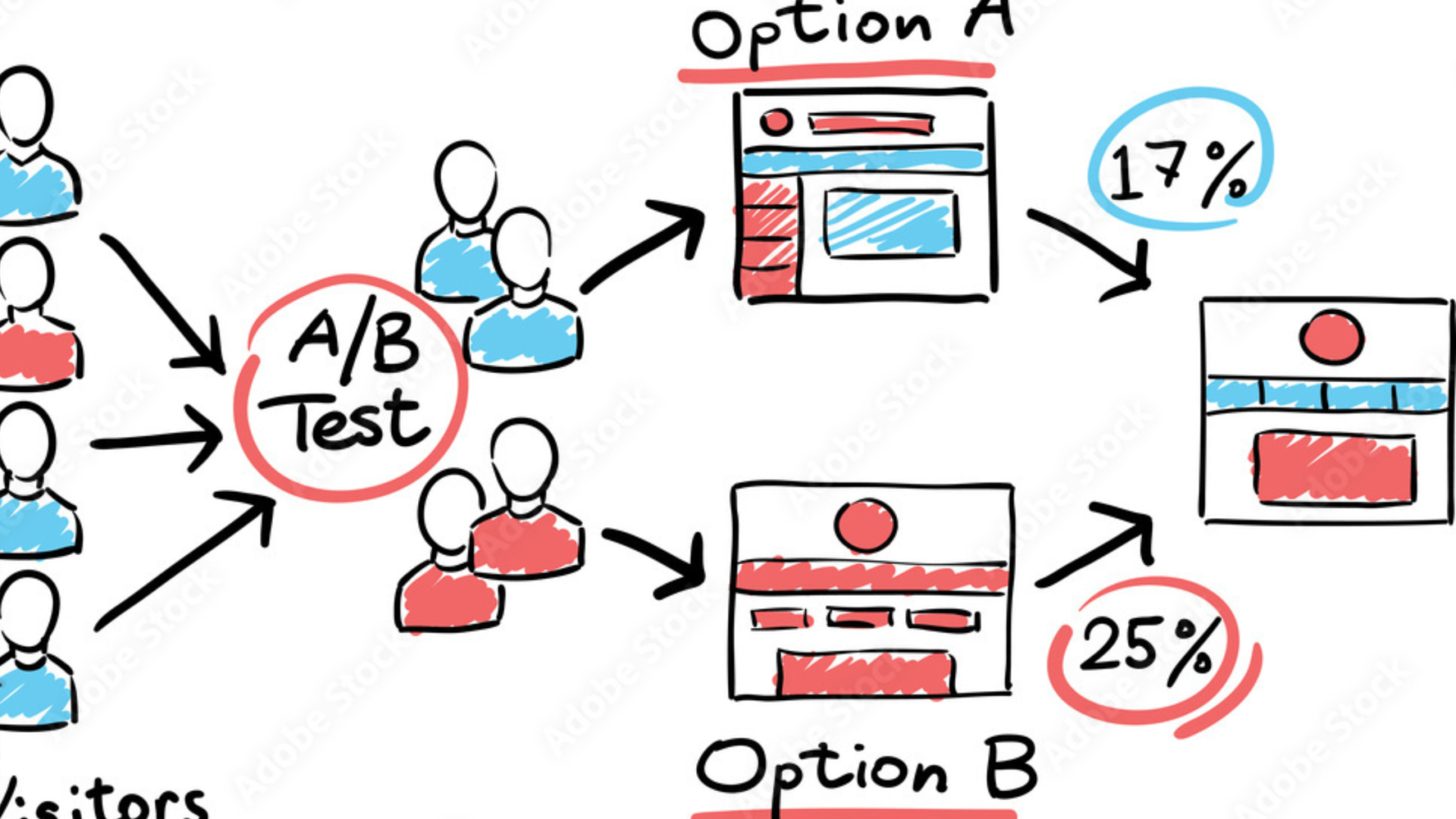

A/B testing, also known as split testing, is an experimental method used in digital advertising. At its most basic, it involves comparing two versions of the same web page – the ‘A’ and ‘B’ – to see which performs better. Each version is shown to a different segment of your website visitors and the conversion rates are then compared.

But, why does it matter?

Picture this: You’re a marketer who has just crafted the perfect marketing campaign. The visuals are stunning, the copy is catchy, and the landing page is a work of art. But for some reason, your conversion rates are far from satisfying.

The question here is, why? Are the subject lines off-putting? Is the call to action weak? Or is it simply that your target audience doesn’t care for the background color of the sign-up form? You’re playing the lottery with your marketing efforts, making guesses without knowing where you went wrong.

This is where A/B testing comes in, my friends. It’s time to stop guessing and start testing!

The Importance of A/B Testing

No marketing team wants to see their well-crafted marketing campaign generate inconclusive results. Yet, without a well-defined process of testing, you’re merely throwing things at the wall and seeing what sticks. You need data to drive your decisions, and this is where split testing steps into the spotlight.

A/B testing doesn’t just improve your website’s performance by increasing conversions; it makes your team smarter. It allows you to understand your audience’s behavior and preferences, helping you deliver more impactful marketing campaigns in the future. Plus, as you gather more reliable data from your tests, you can begin testing multiple variables in a multivariate test for even greater insights.

The Science Behind A/B Testing

Understanding the Mechanics of A/B Testing

In a typical A/B test, you start with a hypothesis. For instance, you might think that changing the color of your call-to-action button from blue to red will increase the click-through rate.

Next, you create two versions of the same web page. The first version (A) remains unchanged, serving as your control group. The second version (B) features one changed element – in this case, the color of the call-to-action button.

After running the test and collecting data, you analyze your test results to see if there’s a statistically significant difference between the two. Did version B outperform version A in a way that wasn’t just down to chance?

A/B Testing: One Variable at a Time

A key principle in A/B testing – one that’s sometimes overlooked – is to test one element at a time.

Let me tell you a story from my own experience. Early in my marketing career, I once ran an A/B test where I simultaneously changed the heading, image, and call to action on a landing page. While I saw a change in conversion rates, I had no idea which of the changes had caused it. Was it the heading, the image, the call to action, or a combination of all three? I ended up with statistically significant results, but they were practically meaningless because I had no idea what had worked and what hadn’t.

So remember, test one variable at a time. It’s not as speedy as throwing everything at the wall, but it gives you clear, actionable insights.

Making Sense of Statistical Significance

In A/B testing, achieving statistically significant results is the primary focus.

What does this mean?

Imagine tossing a coin twice and getting heads both times. Can you conclude that the coin will always land on heads? Of course not. But what if you tossed the coin 1,000 times and got heads 750 times? Now there’s a trend that’s hard to ignore.

Statistical significance is the same concept. It tells you whether your test results could have happened by chance or if they represent a real trend. That’s why it’s crucial to have a large enough sample size for your tests. Without it, you might be making decisions based on random noise rather than real insights into user behavior.
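To put numbers on the coin example, here is a minimal Python sketch. It assumes a fair coin and asks how likely it is to see at least that many heads purely by chance. The function name `prob_at_least` is made up for this illustration.

```python
from math import comb


def prob_at_least(heads: int, tosses: int) -> float:
    """Chance that a fair coin shows at least `heads` heads in `tosses` tosses."""
    # Count every outcome with `heads` or more heads, then divide by all
    # 2**tosses equally likely outcomes.
    favorable = sum(comb(tosses, k) for k in range(heads, tosses + 1))
    return favorable / 2 ** tosses


two_tosses = prob_at_least(2, 2)            # two heads out of two tosses
thousand_tosses = prob_at_least(750, 1000)  # 750 heads out of 1,000 tosses

print(f"2 of 2 heads by chance:        {two_tosses:.2f}")
print(f"750 of 1,000 heads by chance:  {thousand_tosses:.3g}")
```

Two heads in a row happens a quarter of the time with a perfectly fair coin, so it proves nothing. Getting 750 heads out of 1,000 by luck is so unlikely that chance stops being a believable explanation. That gap is what a significance check measures.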

A step-by-step guide to conducting your own A/B tests follows later in this post. It’s time to stop playing the lottery with your marketing campaigns and start making data-driven decisions for meteoric conversion rates!

First, though, let’s look at why you should A/B test your digital ad creative.

Why A/B Test Your Digital Ad Creative?

The Power of A/B Testing in Driving Conversions

So why should you A/B test your digital ad creative? Simple. It can revolutionize your conversion rates. No more hoping, guessing, or predicting what will work. With A/B testing, you’re basing decisions on hard data. This method shows you clearly what resonates with your audience, which can lead to some pretty impressive upticks in your click-through and conversion rates.

Imagine that you’ve just launched a new product line. You design two versions of the ad creative, one with a celebrity endorsement and another focusing solely on the product’s features. How would you know which will get more traffic and conversions? Yep, you guessed it – an A/B test. A well-executed A/B test will show you which ad creative performs best with your target audience.

The Evidence: A/B Testing Case Studies

I once worked with a company that was struggling to generate leads from their landing page. They’d tried everything they could think of: tweaking their call to action, playing with the layout, even changing the color scheme. Nothing seemed to make a significant difference.

Finally, they decided to A/B test the landing page. For version A, they kept the existing page design. For version B, they introduced a personal story from the CEO in the content, keeping all the other elements the same. The result? A 30% increase in sign-ups on version B.

This isn’t a one-off example. There are countless case studies out there showing the impact of A/B testing on conversion rates. The key takeaway is that, often, it’s the elements you overlook that make the most significant changes.

Debunking A/B Testing Myths

“But isn’t A/B testing just for tech giants with massive amounts of traffic?”

I’ve heard this question many times. The truth is, you don’t need millions of website visitors to run meaningful A/B tests. Sure, more data is always helpful. But even smaller sites can reap the benefits of A/B testing. As long as you have enough traffic to achieve statistically significant results, you’re good to go.

Remember, even small improvements to your conversion rate can make a big difference over time. So, don’t write off A/B testing as something only for the big players. If you’re serious about maximizing your marketing strategy, A/B testing should be part of your arsenal.

How to Conduct an A/B Test

Setting Up Your A/B Test

So how do you conduct an A/B test?

First, identify your goal. This could be anything from increasing the click-through rate on your sign-up form to boosting the conversion rate on your landing page. This desired outcome will shape your test hypothesis. For example, you might hypothesize that adding customer testimonials to your landing page will increase conversions.

Second, create your two versions: A (the control) and B (the variant). Remember, only one element should differ between the two versions. This could be anything: the headline, a subject line, an image, the call to action, etc.

Finally, split your traffic between the two versions. Use an A/B testing tool to divide your existing traffic evenly and randomly, and an analytics tool like Google Analytics to track how each version performs. An even, random split is key to ensuring that your test results are reliable.
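If you are curious what an even, random split looks like under the hood, here is one common approach sketched in Python. It hashes a visitor ID so that each visitor always lands in the same version. The visitor IDs, the experiment name, and the `assign_variant` function are all assumptions made for this example, not the API of any particular testing tool.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "landing-page-test") -> str:
    """Place a visitor in version A or B, the same way on every visit."""
    # Hashing the experiment name together with the visitor ID gives a stable,
    # effectively random number for each visitor.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"  # 50/50 split


# Simulate 10,000 hypothetical visitors and check that the split is roughly even.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1

print(counts)
```

Because the assignment depends only on the ID, a returning visitor never flips between versions, which keeps the two groups clean.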

Running Your Test and Analyzing the Results

Next, let your test run. Be patient here. You want to collect enough data to make your results statistically significant.

Once your test concludes, it’s time for analysis. Check out the conversion rates for both versions. If one outperforms the other in a statistically significant way, you’ve found your winner!
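One standard way to check whether a difference in conversion rates is statistically significant is a two-proportion z-test. The sketch below uses only Python’s standard library. The visitor and conversion counts are invented for illustration, and the 0.05 cutoff is a common convention rather than a rule from this guide.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; return both rates, the z-score and the p-value."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if A and B really performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: chance of a gap at least this large if there is no real difference.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Hypothetical results: 5,000 visitors per version.
rate_a, rate_b, z, p_value = two_proportion_z_test(200, 5000, 260, 5000)

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
print("Significant at the 5% level" if p_value < 0.05 else "Not significant yet")
```

Most testing tools run a calculation along these lines for you, but seeing it spelled out makes it clearer why both the size of the gap and the number of visitors matter.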

If your test succeeds, great! You’ve found a proven way to boost your conversion rates. If your test fails, don’t despair. Even a failed test provides insights into what doesn’t work for your audience. Either way, you’re gaining valuable knowledge about your audience’s preferences and behaviors.

The Importance of Continual Testing

It’s crucial to understand that A/B testing is not a one-off process. Your first test might bring significant changes, but the digital landscape is always evolving. User behavior changes, new trends emerge, and what worked once might not work forever.

So keep testing. Test different elements, on different pages, and with different segments of your audience. The more you test, the more you’ll understand your audience, and the better your marketing campaigns will perform.

Next, we’ll delve into the best practices for A/B testing and how to avoid common pitfalls.

Best Practices for A/B Testing

Start with a Clear Hypothesis

Every A/B test begins with a hypothesis. Maybe you believe a more engaging subject line will increase email open rates, or perhaps changing the color of your “Buy Now” button will increase click-through rates. Whatever it is, ensure your hypothesis is clear and testable.

Test One Element at a Time

Remember my earlier story where I made simultaneous changes to a landing page and ended up scratching my head, unsure which change led to the improvement? It’s a classic mistake. Testing multiple variables at once might seem like a time-saver, but it muddies the waters. So, stick to changing one element at a time to gain clear, actionable insights.

Allow Enough Time for Your Test

Patience, my friends, is a virtue in the world of A/B testing. Your test must run long enough to collect a substantial amount of data. It might be tempting to call a winner after a few days, but doing so could lead you astray. Remember, achieving statistical significance is crucial, so give your test the time it needs.
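How long is long enough? A rough answer comes from a standard sample-size formula for comparing two conversion rates. The sketch below assumes a 5% significance level and 80% power, which are common defaults rather than figures from this guide. The baseline rate, target rate, and daily traffic are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate number of visitors each version needs to detect the change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days of traffic required when visitors are split evenly across versions."""
    return ceil(n_per_variant * variants / daily_visitors)


# Hypothetical scenario: 4% baseline, hoping for 5%, 1,000 visitors per day.
n = sample_size_per_variant(0.04, 0.05)
days = days_needed(n, daily_visitors=1000)

print(f"Visitors needed per version: {n}")
print(f"Estimated test length: {days} days")
```

Notice how quickly the required sample grows as the expected lift shrinks. That is why calling a winner after a few days is so risky.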

Test, Learn, Apply, Repeat

A/B testing isn’t a “set it and forget it” type of deal. It’s a cycle. Test an element, learn from the results, apply changes based on those results, and then start a new test. Continuous testing lets you adapt your marketing strategy as user behavior and preferences change, keeping your campaigns fresh and effective.

Tell Us Your Goals

Send us your contact info and we'll follow up with a quick call to find out more about your goals and talk about how Clicks and Clients can help.

Common Pitfalls in A/B Testing and How to Avoid Them

Testing Too Many Things at Once

Yes, I’ve said it before, and I’ll say it again because it’s that important. Avoid the temptation to test multiple versions of a webpage with multiple changes in each version. Not only does this require a lot more traffic to achieve statistically significant results, but it also muddies the waters, making it difficult to pinpoint what caused any changes in performance.

Ending Your Test Too Early

Another common pitfall is ending your test too soon, especially if you see significant changes early on. While it might be exciting to see drastic improvements right off the bat, it’s essential to allow your test to run its course and collect enough data. Otherwise, your results may be skewed by randomness, not real trends.

Ignoring Small Wins

You might be dreaming of that one test that skyrockets your conversion rates overnight. But don’t dismiss the power of small, incremental improvements. A 1% increase in your conversion rate might not seem like much in isolation, but over time, these “small wins” can compound into significant gains.
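The compounding effect is easy to check with a little arithmetic. This sketch assumes each winning test delivers a 1% relative lift on top of the previous rate. The 4% starting rate and the twelve wins are hypothetical.

```python
def compounded_rate(baseline_rate: float, lift_per_win: float, wins: int) -> float:
    """Conversion rate after a series of wins that each add a relative lift."""
    return baseline_rate * (1 + lift_per_win) ** wins


baseline = 0.04  # hypothetical starting conversion rate of 4%
after_twelve = compounded_rate(baseline, 0.01, 12)
total_lift = after_twelve / baseline - 1

print(f"After 12 small wins: {after_twelve:.2%} (total lift {total_lift:.1%})")
```

Twelve wins of 1% each add up to more than 12% in total, because every new lift builds on the gains that came before it.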

Not Considering Mobile vs. Desktop

User behavior often varies between mobile and desktop. So, don’t forget to factor this into your testing methods. What works for your mobile audience might not resonate with your desktop users, and vice versa. Consider running concurrent tests for mobile versus desktop to ensure that your website is optimized for all users.

Overgeneralizing Your Test Results

Finally, it’s essential not to overgeneralize your test results. Just because a particular page design worked for one product or service doesn’t mean it’ll work for another. Each A/B test is specific to a particular element, on a particular page, for a particular audience. So while A/B testing can provide valuable insights, don’t assume that what works in one context will work in another.

Before we wrap up, let’s look at how to run A/B tests on two major ad platforms: Facebook and Google Ads.

A/B Testing on Facebook: Mastering the Split Test

A. Setting Up Your Facebook Split Test

Facebook’s testing methodology often goes by the term “split testing.” This setup allows you to run multiple tests at the same time, providing a comprehensive understanding of how different variables perform against each other.

To set up a split test on Facebook, start with the creation of a single campaign. Within this campaign, create multiple ad sets, each one testing a different variant. Variants can be anything from audience demographics, ad placement, and delivery optimizations to creative aspects of the ad like headlines, images, or calls to action.

One of these ad sets should serve as your control group, a baseline version that remains constant for comparative purposes. It’s recommended to set a budget that’s about three times your target CPA for each ad set. This allows for sufficient data collection while optimizing for the lowest cost.
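The budget rule of thumb above works out as simple arithmetic. In this sketch the $25 target CPA and the three ad sets are hypothetical, and `split_test_budget` is a name made up for the example.

```python
def split_test_budget(target_cpa: float, ad_sets: int, multiplier: float = 3.0):
    """Budget per ad set (about 3x target CPA) and the total across all ad sets."""
    per_ad_set = target_cpa * multiplier
    return per_ad_set, per_ad_set * ad_sets


# Hypothetical: $25 target CPA, one control ad set plus two variants.
per_ad_set, total = split_test_budget(target_cpa=25.0, ad_sets=3)

print(f"Budget per ad set: ${per_ad_set:.2f}")
print(f"Total test budget: ${total:.2f}")
```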

B. What Can You Test on Facebook?

Facebook provides an abundance of options for split testing. Here are some ideas:

- Audience: Segment your audience based on demographics, interests, behavior, location, and more. You could also test lookalike audiences versus custom audiences to see which performs better.

- Ad Placement: Test the performance of your ad across different placements, such as Facebook’s news feed, Instagram’s feed, or the Audience Network.

- Creative Elements: Experiment with different images, headlines, ad copy, and calls to action to see what resonates with your audience.

- Delivery Optimization: Test different bid strategies and optimization goals to find out what yields the best results for your campaign.

C. Analyzing Your Facebook Split Test Results

Upon completion of your split test, it’s time to dive into the data. Key metrics to consider include cost per thousand impressions (CPM), click-through rate (CTR), cost per click (CPC), thumb stop rate, and cost per result. Comparing these metrics across your ad sets will reveal the best-performing variants and guide your future campaigns.
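Here is a minimal sketch of how those metrics can be calculated from raw ad set numbers. All figures are invented. Thumb stop rate is assumed here to mean 3-second video views divided by impressions, which is a common definition but not one this guide spells out. The sketch also assumes every count is greater than zero.

```python
from dataclasses import dataclass


@dataclass
class AdSetStats:
    name: str
    spend: float             # total amount spent
    impressions: int
    clicks: int
    three_second_views: int  # assumed basis for thumb stop rate
    results: int             # conversions, leads, or whatever the campaign optimizes for


def summarize(stats: AdSetStats) -> dict:
    """Compute the comparison metrics for one ad set."""
    return {
        "cpm": stats.spend / stats.impressions * 1000,
        "ctr": stats.clicks / stats.impressions,
        "cpc": stats.spend / stats.clicks,
        "thumb_stop_rate": stats.three_second_views / stats.impressions,
        "cost_per_result": stats.spend / stats.results,
    }


# Hypothetical results for a control ad set and one variant.
ad_sets = [
    AdSetStats("control", 300.0, 40_000, 600, 10_000, 20),
    AdSetStats("variant", 300.0, 42_000, 840, 12_600, 28),
]

summaries = {s.name: summarize(s) for s in ad_sets}
best = min(summaries, key=lambda name: summaries[name]["cost_per_result"])

for name, metrics in summaries.items():
    print(name, {k: round(v, 4) for k, v in metrics.items()})
print(f"Lowest cost per result: {best}")
```

Ranking by cost per result is only one choice. Depending on your goal, you might rank by CTR or thumb stop rate instead.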

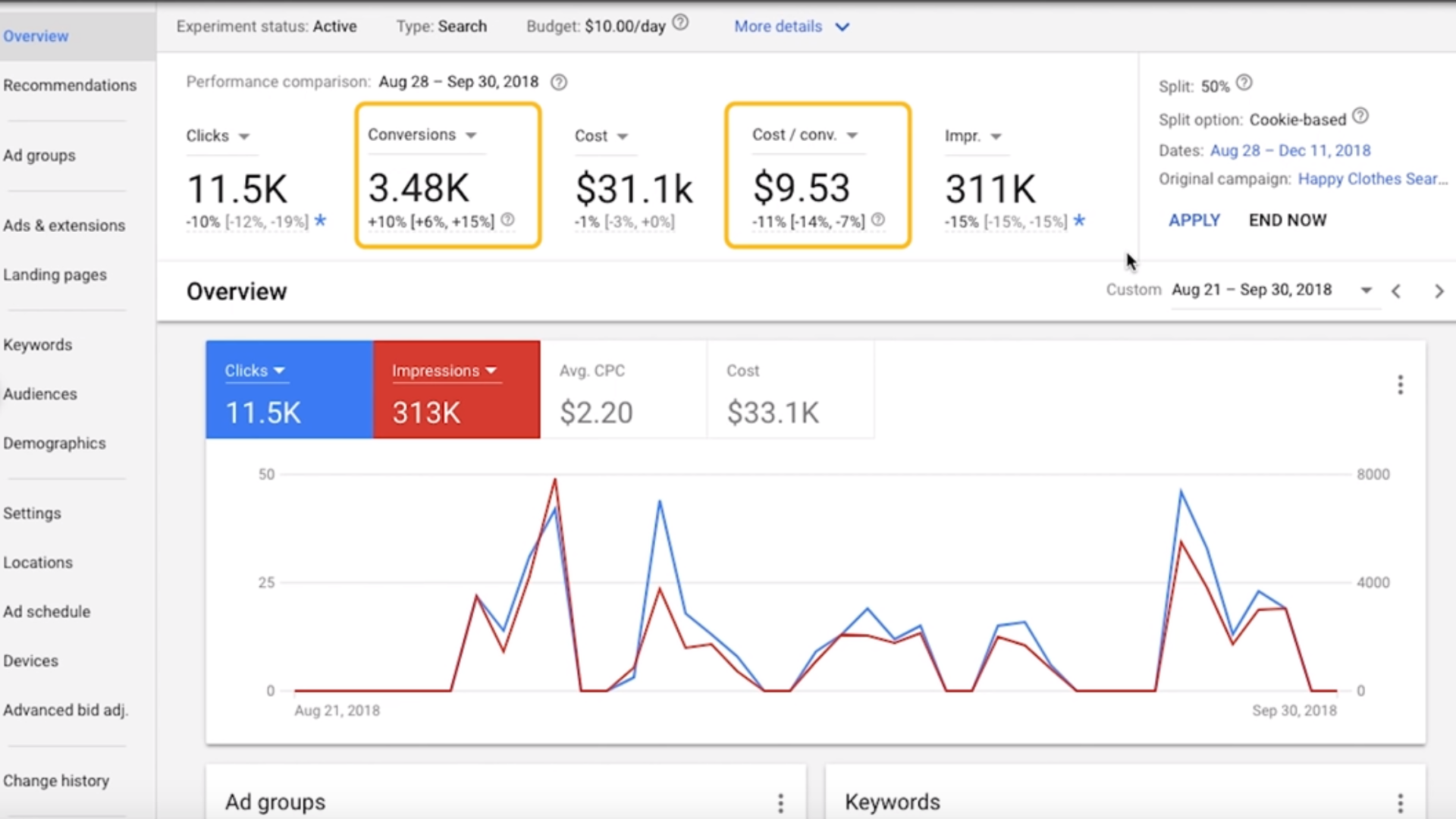

A/B Testing on Google Ads: Harnessing the Power of Experiments

A. Setting Up Your Google Ads Experiment

A/B testing on Google Ads is accomplished through a feature called “Experiments.” This feature offers an intuitive process: you select the variable you wish to test, specify the metrics you want to improve, and choose the duration of your test.

B. What Can You Test on Google Ads?

- Ad Variations: Test different headlines, descriptions, display paths, and final URLs in your ads to see which ones drive the best performance.

- Landing Pages: Test different landing pages to see which ones lead to higher conversion rates.

- Bid Strategies: Try out different bid strategies to see which ones yield the best cost-per-acquisition or return on ad spend.

- Ad Schedules: Test different ad schedules to see when your ads perform best.

C. Evaluating Your Google Ads Experiment Results

Once the test period ends, Google Ads provides a detailed analysis of the results, showing the impact of the changes you tested. Remember, even if the test fails to yield an improvement, it’s a chance to learn and refine your marketing efforts.

A/B testing is the bridge between guesswork and data-driven decision making. Whether it’s Facebook or Google Ads, the practice equips you with the knowledge to optimize your marketing strategy. Test, learn, adapt, and conquer the digital advertising world.

A/B Testing Tools and Resources

Choosing the Right A/B Testing Tool

Now that you’re equipped with the knowledge of how to A/B test, you might be wondering, “How do I actually run these tests?” Well, there’s a variety of tools out there to help with this.

Google Optimize was long a popular choice thanks to its seamless integration with Google Analytics, which let you set up experiments and analyze the results all in one place. Google retired it in September 2023, though, so new tests need a different tool.

Optimizely is another fantastic option, offering features such as multivariate testing and audience targeting. If you’re running an eCommerce store, a tool like VWO might be a good fit as it’s designed with online retail in mind.

Ultimately, the best tool for you depends on your specific needs and budget. So, spend some time researching to find the right fit.

Educate Yourself and Your Team

A/B testing is a science, and like any science, it can be complex. Luckily, there are plenty of resources out there to help you and your marketing team become testing gurus.

Online courses, webinars, blog posts, and eBooks can all help you deepen your understanding of A/B testing. You might even consider attending conferences or networking events focused on conversion rate optimization. Knowledge is power, and the more you know, the more effective your A/B tests will be.

Wrapping it Up: Embrace a Culture of Testing

The Mindset Shift

A/B testing isn’t just a technique. It’s a mindset. It’s about letting go of assumptions and letting data guide your decisions. Embracing this mindset can lead to impressive improvements in your conversion rates and overall marketing performance.

Once you start testing, it’s hard to stop. You start to see your marketing efforts as a living, evolving entity, something that can always be tweaked and improved. That’s the magic of A/B testing. It’s not just about the increases in conversion rates, important as they are. It’s about the relentless pursuit of better.

The Impact of A/B Testing on Your Business

Imagine a world where you’re not guessing what your audience wants, but know it. You’re confident in your marketing decisions because they’re backed by data. This is the power of A/B testing. And the impact extends beyond your marketing team. When you can clearly demonstrate the ROI of your marketing efforts, it fosters trust and collaboration across your entire organization.

Keep Testing, Keep Improving

Finally, remember that A/B testing is not a one-and-done deal. It’s an ongoing process, a never-ending journey towards better understanding your audience and delivering more impactful marketing campaigns. So, keep testing, keep learning, and most importantly, keep improving. Because in the world of digital marketing, learning never stops.

Congratulations! You’ve just taken a massive step towards becoming an A/B testing expert. Now, go out there and let your data do the talking!

Paul Rakovich
